You Cannot Create an "Unbiased" Wikipedia

How would you write a completely unbiased encyclopedia article about the violence currently occurring in Gaza?

I mean completely unbiased. I want you to bracket off and ignore every opinion you have about it whatsoever. Not taking anyone’s side. Nobody is wrong or right. Just the sterile facts. What do you write?

Well, you’ll probably want to begin by saying something like, on October 7th, 2023, a certain number of armed militants from the Gaza Strip in Palestine invaded Israel and murdered a certain number of civilians. They also took a number of hostages back into Palestine, and then we get into Israel’s response to that attack. But you’re already being biased, here, on many points:

People on one side will object to you calling the Palestinians “militants,” implying they are a legitimate military force, and will prefer “terrorists,” but that’s biased in the other direction. We will have to retreat to “men.” (I don’t think there were any women among the assailants, but if there were, we will say “persons.”)

People on the other side will object to you calling it an “invasion,” calling what they did “murder,” and calling the people they killed “civilians.” This is biased against those who see this as a legitimate military operation and see the Israelis as an occupying force. We can’t really call them “hostages” either; maybe everyone can agree on “captives.”

So let’s say: On October 7th, 2023, a certain number of men entered Israel from Palestine and ended the lives of a certain number of individuals, and took a certain number captive, and then we can get into Israel’s retaliation.

This is still biased, because it presents Palestine as the initial aggressor rather than as responding to pressures exerted upon it by Israel. But you can’t call Hamas’ actions on October 7th a retaliation either, because that discounts the reasons Israel may have had for exerting those pressures. We cannot write this article without starting it at least one hundred years ago. And now understand: I asked you to write an unbiased article about the present Gaza conflict and we can’t even write an unbiased sentence about October 7th, 2023.

It was a fool’s errand to begin with, framing this as a conflict between Israel and Palestine, because you can’t use those words either. A large number of people on one side don’t believe Palestine exists legitimately, and a large number on the other don’t believe Israel exists legitimately.

I can’t continue this example without a lot of people getting mad at me, if they’re not already, but you get the idea. There’s no way to write this article and retain any amount of useful information whatsoever. A machine couldn’t do it. So let’s cut to the chase:

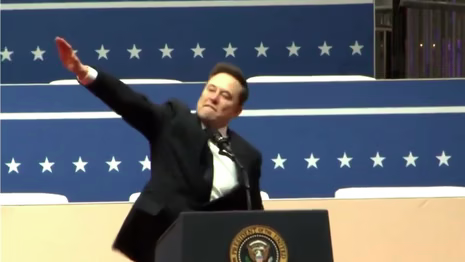

Elon Musk wants to kill Wikipedia, because it’s biased.

His beef with the online encyclopedia is longstanding, and it is intensified by the fact that it’s one of the few things he can’t control with brute force of capital. Musk lives in a world in which everything is for sale, including governments, but he can’t buy Wikipedia: as a nonprofit, it has no shares for sale. He’s tried looking at ways to choke off its income, but it relies on donations. Capitalism is the only weapon he has, so finally, he’s just decided to make his own, better alternative and compete it to death.

He doesn’t go much into what he thinks is wrong with Wikipedia but I’m almost certain I can guess. Wikipedia’s article about January 6 is titled “January 6 United States Capitol attack,” and begins like this:

On January 6, 2021, the United States Capitol in Washington, D.C., was attacked by a mob of supporters of President Donald Trump in an attempted self-coup, two months after his defeat in the 2020 presidential election. They sought to keep him in power by preventing a joint session of Congress from counting the Electoral College votes to formalize the victory of then president-elect Joe Biden. The attack was unsuccessful in preventing the certification of the election results. According to the bipartisan House select committee that investigated the incident, the attack was the culmination of a plan by Trump to overturn the election.

The article about the Great Replacement starts like this:

The Great Replacement (French: grand remplacement), also known as replacement theory or great replacement theory, is a debunked white nationalist far-right conspiracy theory coined by French author Renaud Camus. Camus' theory states that, with the complicity or cooperation of "replacist" elites, the ethnic French and white European populations at large are being demographically and culturally replaced by non-white peoples—especially from Muslim-majority countries—through mass migration, demographic growth and a drop in the birth rate of white Europeans. Since then, similar claims have been advanced in other national contexts, notably in the United States. Mainstream scholars have dismissed these claims of a conspiracy of "replacist" elites as rooted in a misunderstanding of demographic statistics and premised upon an unscientific, racist worldview.

The article on climate change states clearly that it is driven by human activities. The Covid-19 article says that the virus came from bats or another related animal and theories of it being engineered by humans are unsupported by the evidence. The Gamergate article says it was a harassment campaign started as a right-wing backlash against feminism.

You get the idea. Wikipedia is woke, but only because the preponderance of evidence is woke, and Musk’s contention is that this means the bulk of evidence is, in fact, fake manufactured propaganda.

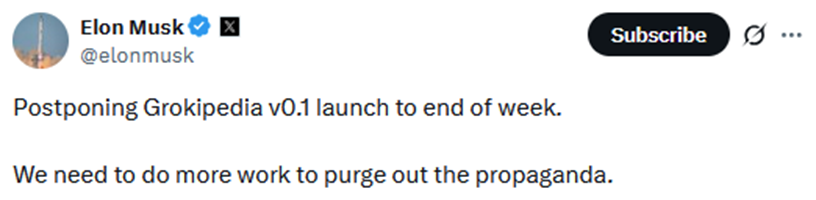

His coming alternative, “Grokipedia,” will be written by Grok, his large language model AI, which will reform the entire corpus of human knowledge from a point of view that is neutral, objective, and free of bias. It was supposed to launch Monday, October 20, but…

So let’s get this right out of the way first: We all know that Elon Musk doesn’t want to build an unbiased Wikipedia, he wants to build a very far-right Wikipedia. But that sort of is the crux of the issue: That, to him, is unbiased. It’s why he consistently considers himself a centrist, or even a liberal, despite his politics being to the right of the majority of people—he thinks everything he believes is simply correct, and to that end, unbiased, neutral, and objective. His worldview is the nucleus of truth, and everyone who disagrees with him on any issue is orbiting him on various different levels of wrong.

This is true, to some extent, of all of us.

Socrates is popularly regarded as one of the wisest people in history, and it’s for the simple statement that “the wisest man knows that he knows nothing.” We can, and should, be conscious of our own biases. This ability we have to fundamentally unpack ourselves is an extraordinary feat of reasoning, and it makes me extremely skeptical about efforts toward “artificial general intelligence” (the name they came up with for what we used to just call AI, now that we have to distinguish it from dumb stochastic language models).

By exercising this ability we prevent ourselves from becoming shrieking bigots like Elon Musk, who refuses to. But we also need to recognize that we can never fully do this. We cannot see ourselves completely objectively, just as we can’t see 100% of our field of vision—there’s always, necessarily, a blind spot where the optic nerve comes out, and it needs to be there for vision to work at all.

We all have some kind of strongly held belief, which we need to function, and we think it is the truth, or else we wouldn’t believe it.

Those who have followed me for a long time know that I believe strongly in the existence of objective truths. It’s why I so thoroughly despise the project of the populist right to unmake the concept of truth and replace it with a sophist’s persuasion-centered epistemic model, which regards facts as irrelevant, if not as obstacles to overcome. They are hostile to truth to the extent that people who fact-check are cast as villains and the enemies of free speech.

That said, we can never completely reach the truth. We can get closer to it, but we can’t touch it. It’s like a hyperbola that approaches its asymptote forever without ever reaching it. Everyone has their biases and you can’t just smash everyone’s opinion together and find the truth by finding the average. It just doesn't work that way.

Hannah Arendt pointed out that there are different types of truths in the world. There are things you can discern from first principles. Mathematics might be an example of a truth that we can completely reach. But truths about events can’t be discerned from first principles. They need to be assembled from various people’s opinions about what happened, along with as many facts as we can find, and we will never have all of them.

Wikipedia can’t eliminate bias, but it does as well as it possibly can through the only technique we have available to us to understand the world: Broad consensus.

It isn’t perfect and it can’t be, but Grok’s attempt to do this will be much worse.

First of all, I think it’s pretty clear that Elon Musk doesn’t really understand what large language model AI actually is or how it works. It cannot, as he claims, discover new technologies or new physics. It might be able to help human beings do these things! But it can’t reason, no matter how far you scale it up, because that’s not what it is or what it does. It doesn’t matter how fast you make a sportscar, how far you scale up its braking system and its suspension and its traction and its air conditioning and its radio, it will never take off and fly. That’s not what it is or what it does.

LLMs are like language calculators. They’ve been fed an unfathomable amount of text and, depending on how well they “understand” what you’re asking, they make a guess about what string of words would most likely follow as a response to that question.
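To make the “language calculator” idea concrete, here is a deliberately tiny sketch. It is not how Grok or any real LLM is built; real models use neural networks trained on vastly more text. The toy corpus and the names `train_bigrams` and `guess_next` are made up for illustration. It only shows the basic move of guessing the next word from what has most often followed before.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def guess_next(following, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus; a real model is fed an unfathomable amount of text.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(guess_next(model, "the"))
```

Notice that nothing in it checks whether the guess is true. It only knows what tends to come next.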

In attempting to build an unbiased encyclopedia, what Grok can sort of do is roughly what Wikipedia editors do anyway, which is consume a lot of information and try to figure out what the broad consensus is.

Where it beats human beings is in the sheer amount of information it can absorb. You’ll never find someone, no matter their expertise on the subject, who has read, let alone remembered, every book ever written about, for example, World War II. Grok plausibly could.

What it can’t do is weigh the reliability of any of that information. Absent outside interference, it will give equal weight to award-winning historian Sir Ian Kershaw and to your cousin who self-published a book he wrote in two weeks that bafflingly calls Germany “Germania,” and it’s uncertain whether he thinks that’s really what it’s called because he just kind of looks at you when you ask.

Determining the reliability of a source is a power that only human beings have, and of course, this is going to result in bias. The bias is going to be more extreme the less an individual is able to self-reflect and really unpack their own biases. I wouldn’t, for example, regard an essay on feminist theory as having any reliability if it was written by Andrew Tate. But there are others who wouldn’t regard an essay on feminist theory as having any reliability if it was written by a man.

These two determinations are both biased, to different degrees. But a “Grokipedia” that wrote an article on feminist theory that consulted and equally weighed the authority of Andrew Tate and, say, Judith Butler, would be a fucking dog’s breakfast. It would be incomprehensible.

That’s the core of the problem. We may not be able to actually reach the objective central truth about anything any more than we can land a spacecraft on the sun, but we can arrive at different distances from it. If we refine our methods, reflect on our biases, and work together with a lot of other reasonable people, then we might be able to land on Mercury. If we get a machine to eat all the opinions of everyone in the world and shit out the average, we’re going to land on Jupiter. You can’t improve an encyclopedia by replacing reasoned analysis of sources with probabilistic guesses.
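Here is a rough numerical sketch of the difference between averaging everyone and weighing sources. Every number in it is invented for illustration, and the reliability weights are exactly the part that a human has to supply; the names `plain_average` and `weighted_average` are hypothetical helpers, not anything Wikipedia or Grok actually runs.

```python
def plain_average(estimates):
    """Treat every source as equally reliable."""
    values = [value for value, _ in estimates]
    return sum(values) / len(values)


def weighted_average(estimates):
    """Weigh each source by a reliability score that a human has assigned."""
    total_weight = sum(weight for _, weight in estimates)
    return sum(value * weight for value, weight in estimates) / total_weight


# (estimate, human-assigned reliability weight); all values are made up.
estimates = [
    (10.0, 9),  # careful historian
    (10.0, 9),  # second careful historian
    (40.0, 2),  # the cousin's self-published book
]

print(plain_average(estimates))     # 20.0
print(weighted_average(estimates))  # 13.0
```

The weighted answer stays near the careful sources, while the plain average gets dragged toward the outlier. The catch is that somebody had to choose those weights, and that choice is where the bias comes back in.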

In order to get an LLM to weigh some people’s opinions higher than others, you have to program this into it from the outset. You need a human puppet master. This brings us back to Elon Musk, who believes the vast majority of mainstream opinion on social topics is wrong, and who almost certainly would rate Andrew Tate’s knowledge about feminist theory as more reliable than Judith Butler’s.

And Musk is, of course, the one who will be teaching Grok about reliable sources. He has already been doing this ever since he gave birth to the damn tortured thing. It was Musk who, in May, got so frustrated with Grok’s insistence on taking the mainstream consensus on “white genocide in South Africa” that he personally intervened to force it to declare that such a thing was really happening. He was caught out when, due to his incompetent implementation of this command, Grok started complaining about white genocide in every single interaction with the public.

Musk, with his lower-than-normal ability to see the extreme bias in himself, will establish his worldview as the truth that he wants it to aim toward. I predict it’s going to be an incredibly frustrating disaster for him as he tries to correct Grok on every assertion it makes that approaches the consensus on any issue. Imagine working as the sole editor of a Wikipedia-sized project and feeling like every page is riddled with errors.

I think that “Grokipedia” will be subject to more recalls than the Cybertruck. But no matter the outcome here—whether it’s incoherent, broken, so liberal that it drives him insane, or so ridiculously fash that Tesla shares take another hit—one thing we can be sure about is how hilarious it’s going to be for the rest of us, not to mention how revealing. After all, the last time he told Grok to act more like him, it started referring to itself as “MechaHitler.”

I am writing a whole-ass book about how toxic libertarianism and masculinity infected the early internet and now we have to deal with this sort of crap. The working title is How Geeks Ate the World and I’m going to be dropping parts of the draft into this very newsletter as the project comes along, but only for paid subscribers. A new chapter is coming out this very weekend! So if you want to read along in real time, please consider subscribing. Otherwise I’ll be keeping you in the loop.